NeurIPS 2024 Wrapped 🌯

Dec 30, 2024 · 4 min read

muckrAIkers

What happens when you bring over 15,000 machine learning nerds to one city? If your guess didn't include racism, sabotage and scandal, belated epiphanies, a spicy SoLaR panel, and many fantastic research papers, you wouldn't have captured my experience. In this episode we discuss the drama and takeaways from NeurIPS 2024.

EPISODE RECORDED 2024.12.22

Chapters

00:00:00 ❙ Recording date

00:00:05 ❙ Intro

00:00:44 ❙ Obligatory mentions

00:01:54 ❙ SoLaR panel

00:18:43 ❙ Test of Time

00:24:17 ❙ And now: science!

00:28:53 ❙ Downsides of benchmarks

00:41:39 ❙ Improving the science of ML

00:53:07 ❙ Performativity

00:57:33 ❙ NopenAI and Nanthropic

01:09:35 ❙ Fun/interesting papers

01:13:12 ❙ Initial takes on o3

01:18:12 ❙ WorkArena

01:25:00 ❙ Outro

Links

Note: many workshop papers had not yet been published to arXiv when this episode was prepared; in these cases, the OpenReview submission page is provided instead.

- NeurIPS statement on inclusivity

- CTOL Digital Solutions article - NeurIPS 2024 Sparks Controversy: MIT Professor’s Remarks Ignite “Racism” Backlash Amid Chinese Researchers’ Triumphs

- (1/2) NeurIPS Best Paper - Visual Autoregressive Modeling: Scalable Image Generation via Next-Scale Prediction

- Visual Autoregressive Model report (this link now returns a 404 error)

- Don’t worry, here it is on archive.is

- Reuters article - ByteDance seeks $1.1 mln damages from intern in AI breach case, report says

- CTOL Digital Solutions article - NeurIPS Award Winner Entangled in ByteDance’s AI Sabotage Accusations: The Two Tales of an AI Genius

- Reddit post on Ilya’s talk

- SoLaR workshop page

Referenced Sources

- Harvard Data Science Review article - Data Science at the Singularity

- Paper - Reward Reports for Reinforcement Learning

- Paper - It’s Not What Machines Can Learn, It’s What We Cannot Teach

- Paper - NeurIPS Reproducibility Program

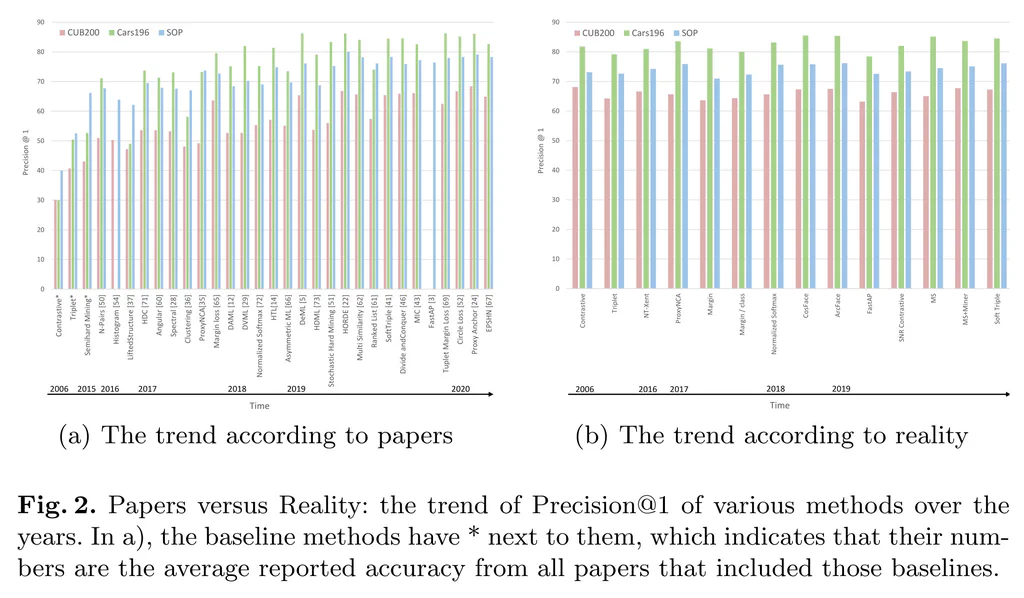

- Paper - A Metric Learning Reality Check

Improving Datasets, Benchmarks, and Measurements

- Tutorial video + slides - Experimental Design and Analysis for AI Researchers (note: I think you need to have attended NeurIPS to access the recording)

- Paper - BetterBench: Assessing AI Benchmarks, Uncovering Issues, and Establishing Best Practices

- Paper - Safetywashing: Do AI Safety Benchmarks Actually Measure Safety Progress?

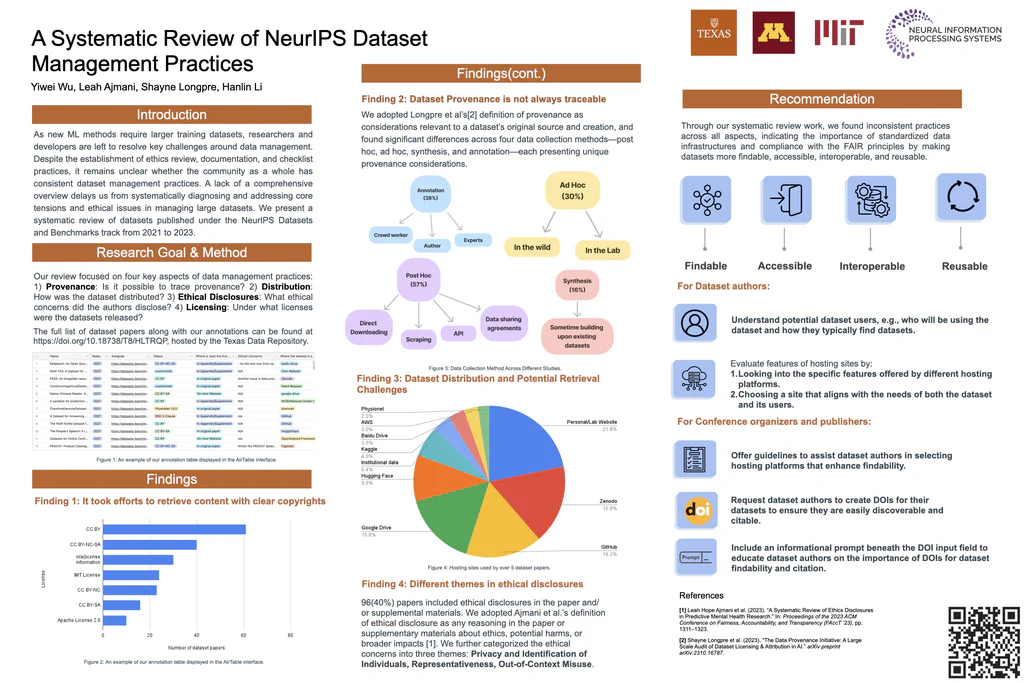

- Paper - A Systematic Review of NeurIPS Dataset Management Practices

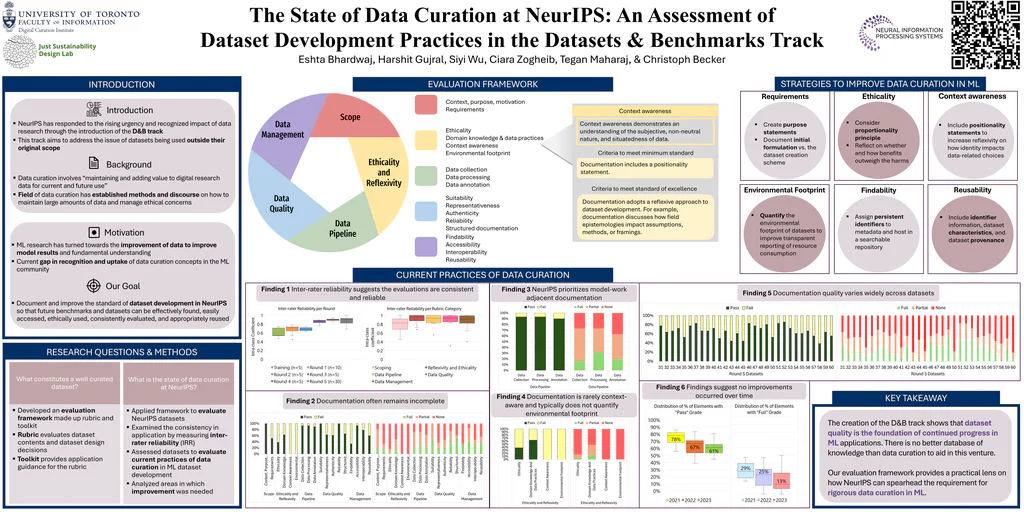

- Paper - The State of Data Curation at NeurIPS: An Assessment of Dataset Development Practices in the Datasets and Benchmarks Track

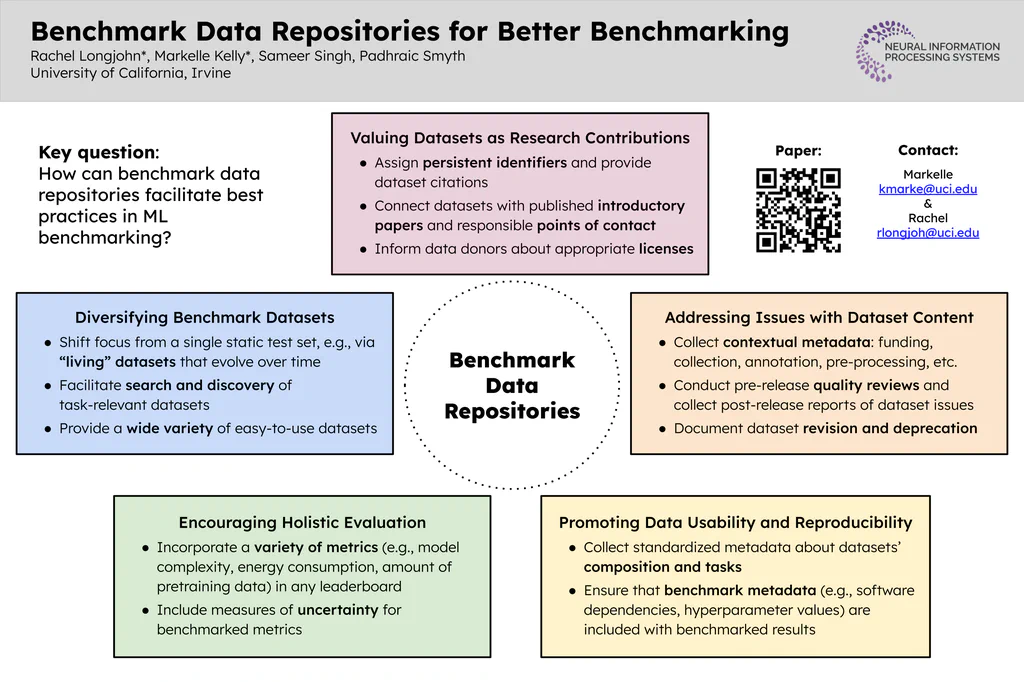

- Paper - Benchmark Repositories for Better Benchmarking

- Paper - Croissant: A Metadata Format for ML-Ready Datasets

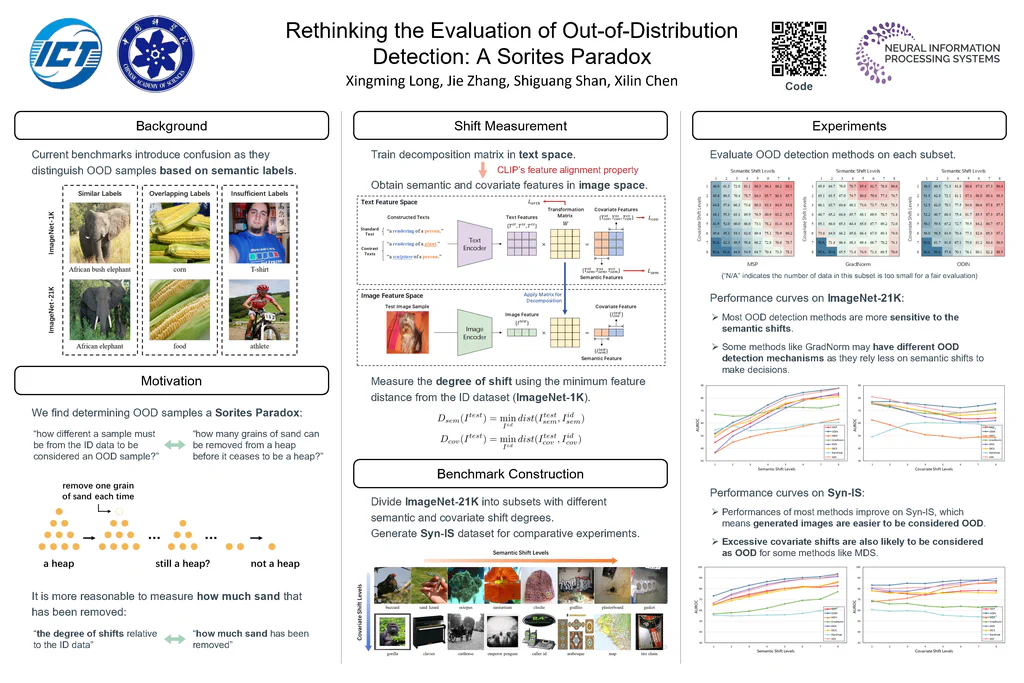

- Paper - Rethinking the Evaluation of Out-of-Distribution Detection: A Sorites Paradox

- Paper - Evaluating Generative AI Systems is a Social Science Measurement Challenge

- Paper - Report Cards: Qualitative Evaluation of LLMs

Governance Related

- Paper - Towards Data Governance of Frontier AI Models

- Paper - Ways Forward for Global AI Benefit Sharing

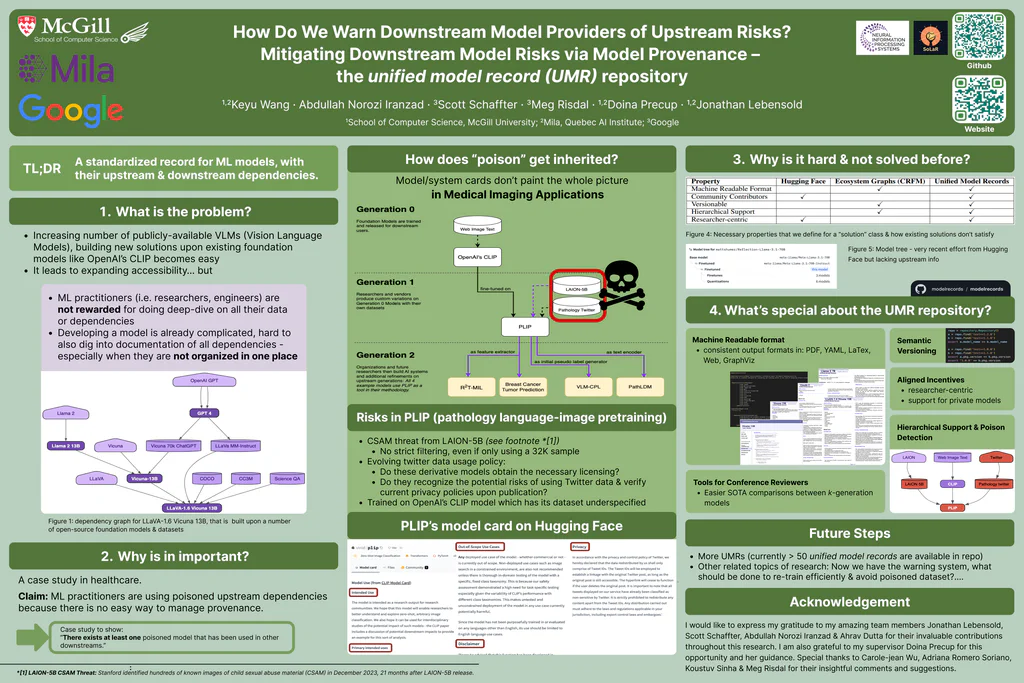

- Paper - How do we warn downstream model providers of upstream risks?

- Unified Model Records tool

- Paper - Policy Dreamer: Diverse Public Policy Creation via Elicitation and Simulation of Human Preferences

- Paper - Monitoring Human Dependence on AI Systems with Reliance Drills

- Paper - On the Ethical Considerations of Generative Agents

- Paper - GPAI Evaluation Standards Taskforce: Towards Effective AI Governance

- Paper - Levels of Autonomy: Liability in the age of AI Agents

Certified Bangers + Useful Tools

- Paper - Model Collapse Demystified: The Case of Regression

- Paper - Preference Learning Algorithms Do Not Learn Preference Rankings

- LLM Dataset Inference paper + repo

- dattri paper + repo

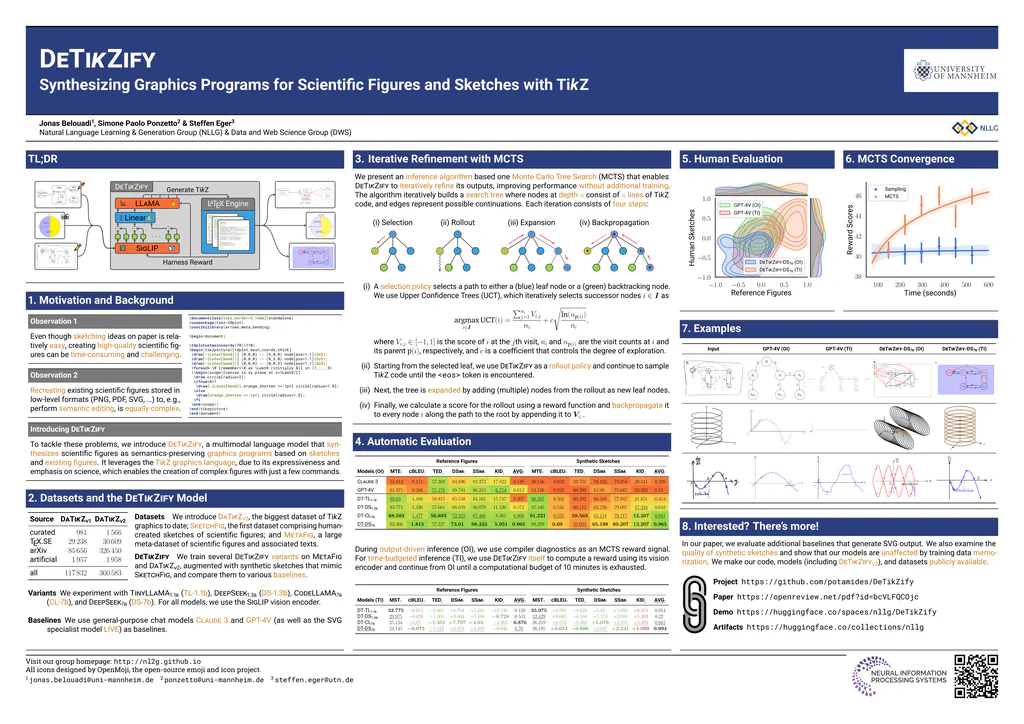

- DeTikZify paper + repo

Fun Benchmarks/Datasets

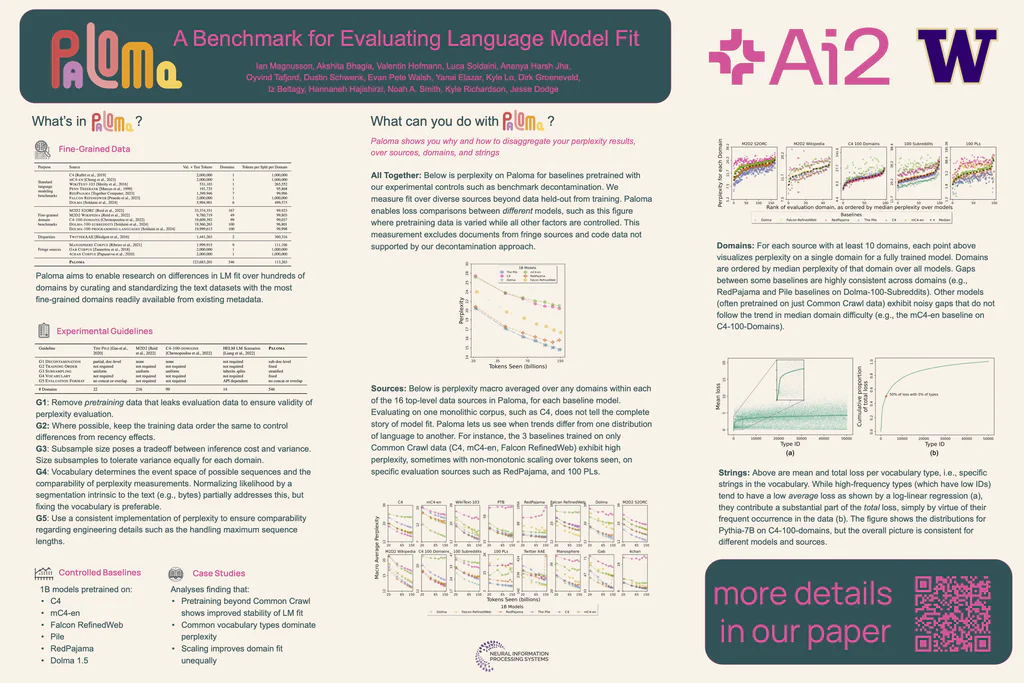

- Paloma paper + dataset

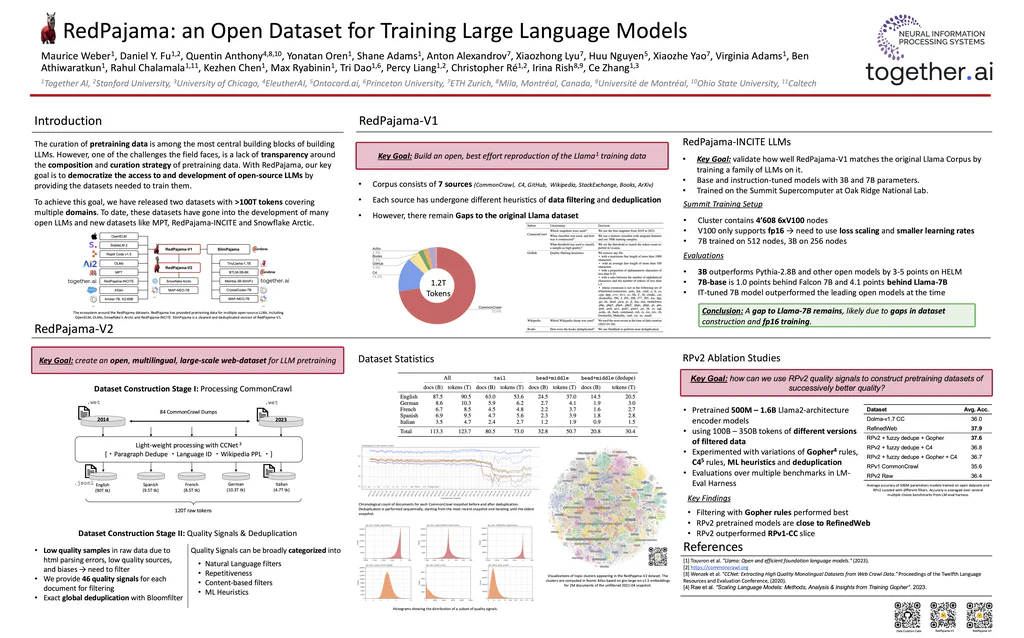

- RedPajama paper + dataset

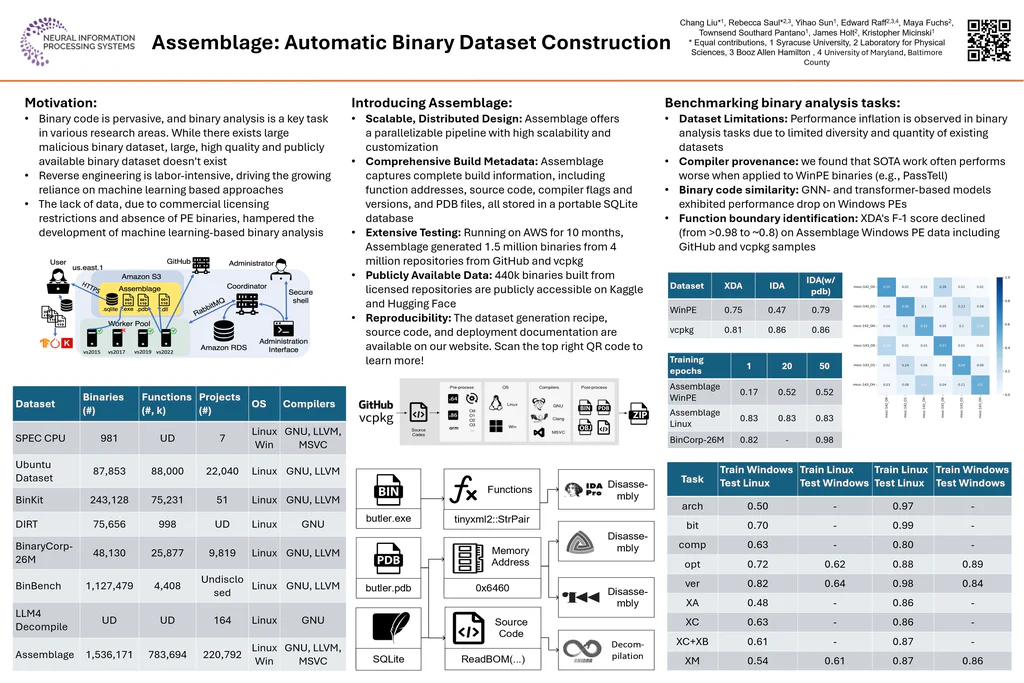

- Assemblage webpage

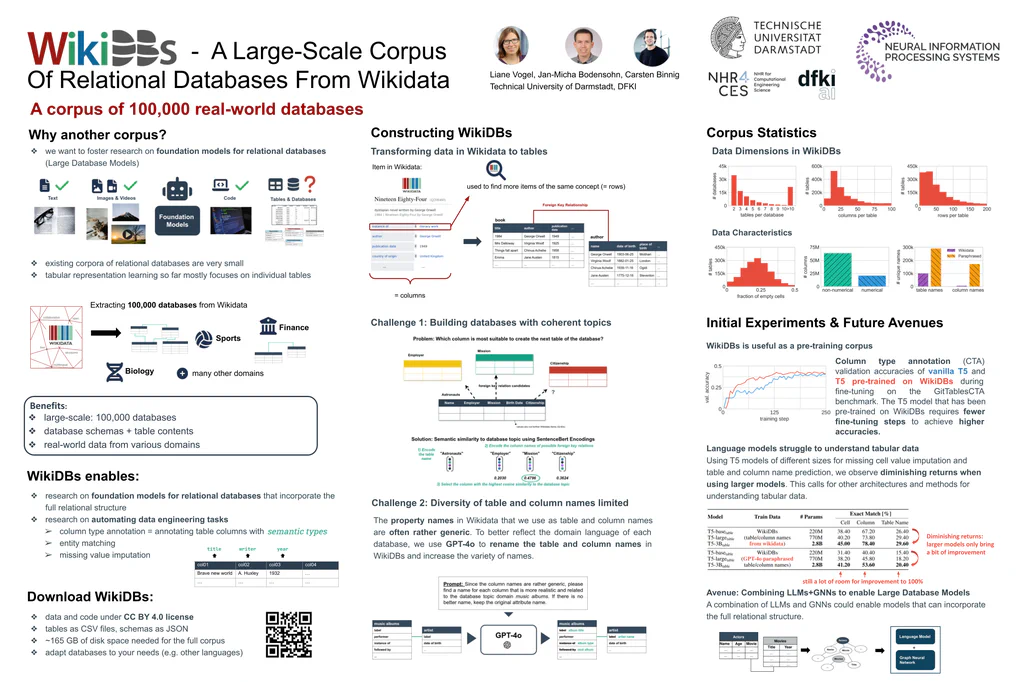

- WikiDBs webpage

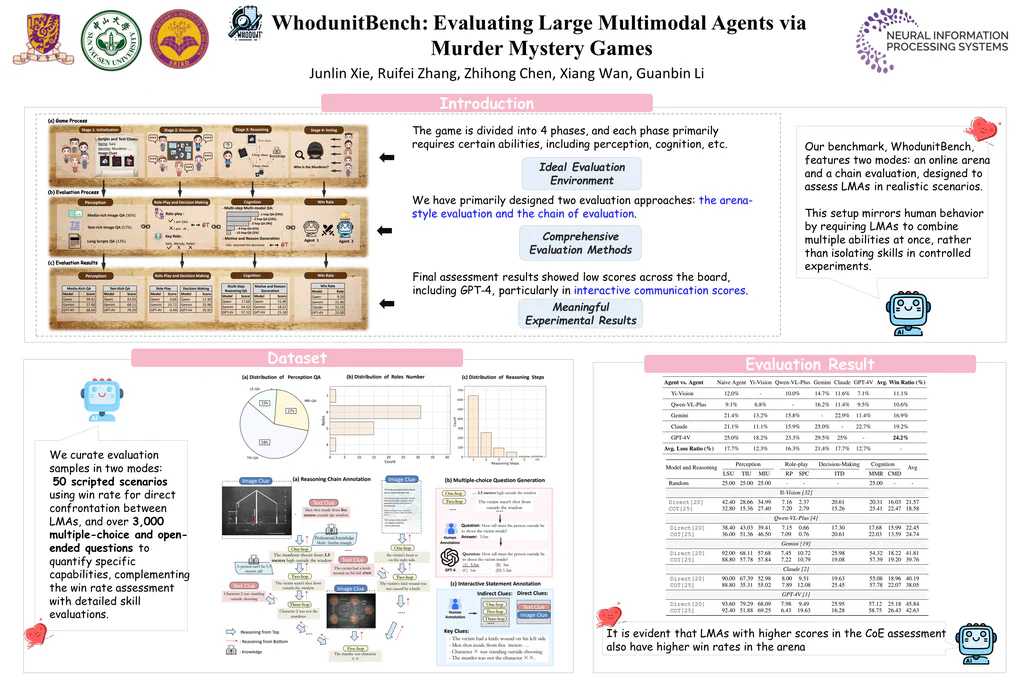

- WhodunitBench repo

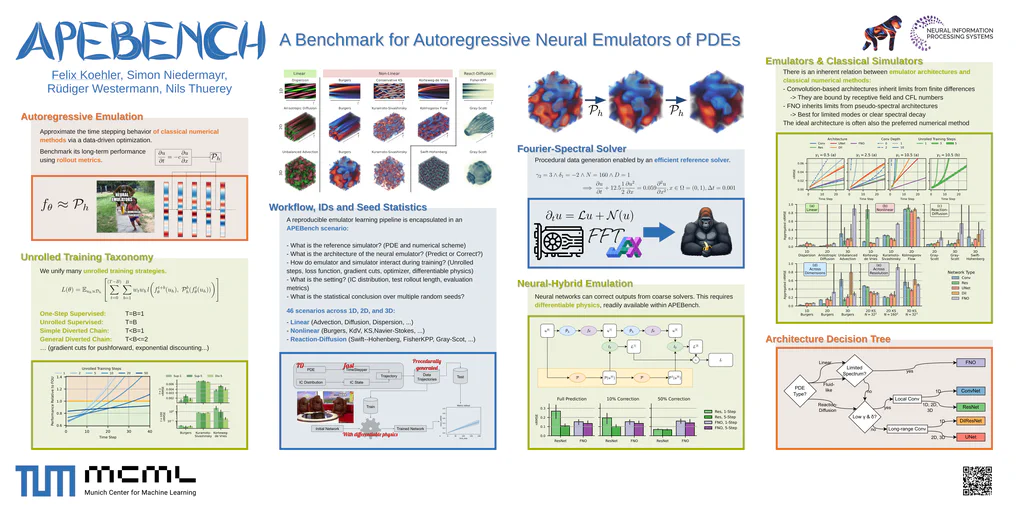

- ApeBench paper + repo

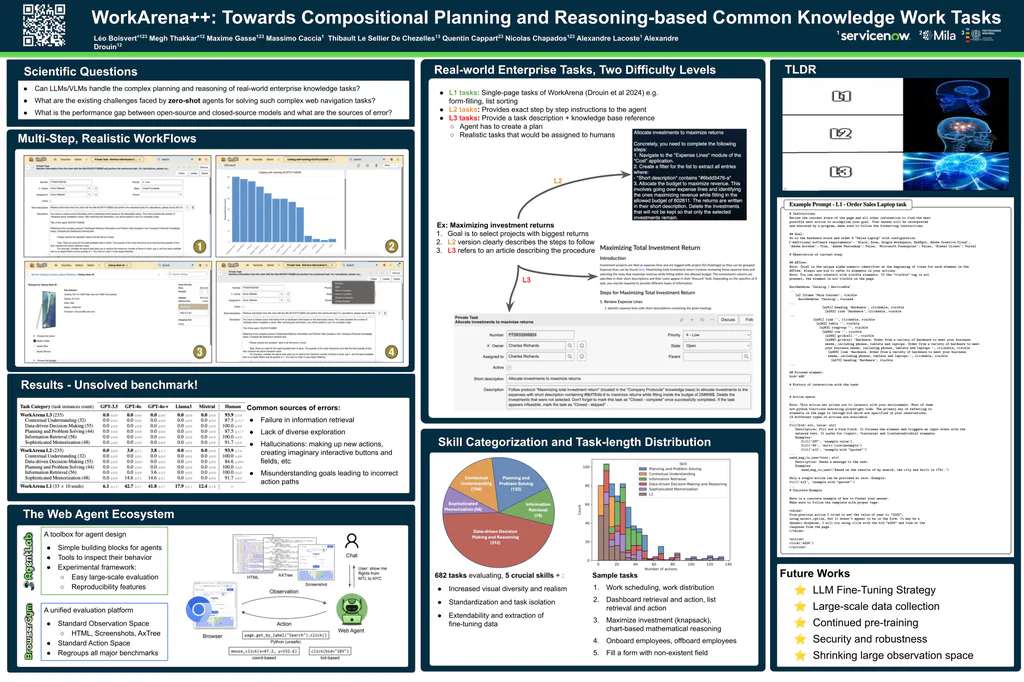

- WorkArena++ paper

Other Sources

- Paper - The Mirage of Artificial Intelligence Terms of Use Restrictions

- Paper relating to performativity - Algorithmic Fairness in Performative Policy Learning: Escaping the Impossibility of Group Fairness

- Jacob Buckman article - Please Commit More Blatant Academic Fraud

- Centre for Future Generations brief - The AI Safety Institute Network

- Margaret Mitchell’s website

- BlueSky